Miniworld is being maintained by the Farama Foundation (https://farama.org/project_standards). See the Project Roadmap for details regarding the long-term plans.

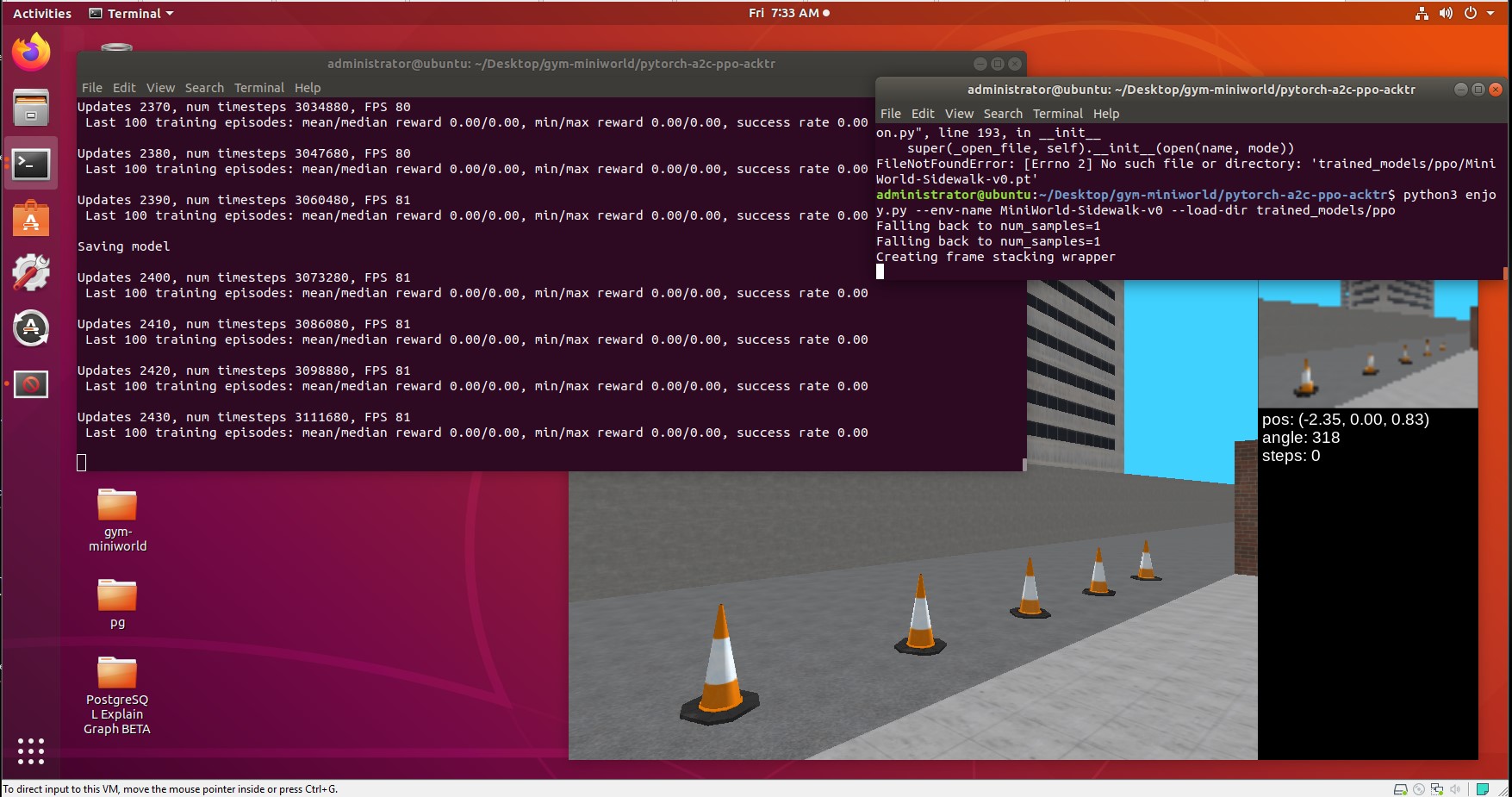

MiniWorld is a minimalistic 3D interior environment simulator for reinforcement learning and robotics research. It can be used to simulate environments with rooms, doors, hallways, and various objects (e.g., office and home environments, mazes). MiniWorld can be seen as a simpler alternative to VizDoom or DMLab. It is written entirely in Python and is designed to be easily modified or extended by students.

Features:

- Few dependencies, less likely to break, easy to install

- Easy to create your own levels, or modify existing ones

- Good performance, high frame rate, support for multiple processes

- Lightweight, small download, low memory requirements

- Provided under a permissive MIT license

- Comes with a variety of free 3D models and textures

- Fully observable top-down/overhead view available

- Domain randomization support, for sim-to-real transfer

- Ability to display alphanumeric strings on walls

- Ability to produce depth maps matching camera images (RGB-D)

Limitations:

- Graphics are basic, nowhere near photorealism

- Physics are very basic, not sufficient for robot arms or manipulation

List of publications & submissions using MiniWorld (please open a pull request to add missing entries):

- Decoupling Exploration and Exploitation for Meta-Reinforcement Learning without Sacrifices (Stanford University, ICML 2021)

- Rank the Episodes: A Simple Approach for Exploration in Procedurally-Generated Environments (Texas A&M University, Kuai Inc., ICLR 2021)

- DeepAveragers: Offline Reinforcement Learning by Solving Derived Non-Parametric MDPs (NeurIPS Offline RL Workshop, Oct 2020)

- Pre-trained Word Embeddings for Goal-conditional Transfer Learning in Reinforcement Learning (University of Antwerp, Jul 2020, ICML 2020 LaReL Workshop)

- Temporal Abstraction with Interest Functions (Mila, Feb 2020, AAAI 2020)

- Addressing Sample Complexity in Visual Tasks Using Hindsight Experience Replay and Hallucinatory GANs (Offworld Inc, Georgia Tech, UC Berkeley, ICML 2019 Workshop RL4RealLife)

- Avoidance Learning Using Observational Reinforcement Learning (Mila, McGill, Sept 2019)

- Visual Hindsight Experience Replay (Georgia Tech, UC Berkeley, Jan 2019)

This simulator was created as part of work done at Mila.

Requirements:

- Python 3.7+

- Gymnasium

- NumPy

- Pyglet (OpenGL 3D graphics)

- GPU for 3D graphics acceleration (optional)
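A quick way to check these requirements is to probe the interpreter from Python. The sketch below is illustrative only: the helper names `missing_requirements` and `python_is_supported` are hypothetical, and it assumes the import names `gymnasium`, `numpy`, and `pyglet` for the packages listed above.

```python
import importlib.util
import sys

# Import names assumed for the packages in the requirements list.
REQUIRED_MODULES = ("gymnasium", "numpy", "pyglet")
MIN_PYTHON = (3, 7)


def missing_requirements(modules=REQUIRED_MODULES):
    """Return the names of top-level modules that cannot be found."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


def python_is_supported(version=None, minimum=MIN_PYTHON):
    """Return True if the given (or running) interpreter meets the minimum version."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= minimum
```

Calling `missing_requirements()` with no arguments returns an empty list when all three packages are importable; anything it does return is a candidate for `pip install`.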

You can install it from PyPI using:

python3 -m pip install miniworld

You can also install from source:

git clone https://github.com/Farama-Foundation/Miniworld.git

cd Miniworld

python3 -m pip install -e .

If you run into any problems, please take a look at the troubleshooting guide.

There is a simple UI application that lets you control the simulation or a real robot manually. The manual_control.py application launches the Gymnasium environment, displays camera images, and sends actions (keyboard commands) back to the simulator or robot. The --env-name argument specifies which environment to load. See the list of available environments for more information.

./manual_control.py --env-name MiniWorld-Hallway-v0

# Display an overhead view of the environment

./manual_control.py --env-name MiniWorld-Hallway-v0 --top_view
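For scripted rather than keyboard-driven control, the same environment can be stepped from Python through the standard Gymnasium `reset`/`step` interface. The following is a minimal sketch, not the project's own code: the helper names `make_env` and `run_random_episode` are hypothetical, and it assumes that importing the `miniworld` package registers the `MiniWorld-*` environment IDs with Gymnasium.

```python
def make_env(env_name="MiniWorld-Hallway-v0"):
    """Create a MiniWorld environment through Gymnasium.

    Assumption: importing miniworld registers the MiniWorld-* IDs.
    """
    import gymnasium as gym
    import miniworld  # noqa: F401  (imported for its registration side effect)

    return gym.make(env_name)


def run_random_episode(env, max_steps=100, seed=0):
    """Step env with random actions and return (steps_taken, total_reward)."""
    env.reset(seed=seed)
    total_reward = 0.0
    steps = 0
    for _ in range(max_steps):
        # Sample a random action in place of a keyboard command.
        action = env.action_space.sample()
        _obs, reward, terminated, truncated, _info = env.step(action)
        total_reward += float(reward)
        steps += 1
        if terminated or truncated:
            break
    return steps, total_reward
```

With MiniWorld installed, `env = make_env()` followed by `run_random_episode(env)` and `env.close()` would run one short random episode in the hallway environment. Keeping the loop separate from environment creation means it works with any object that follows the Gymnasium API.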

There is also a script to run automated tests (run_tests.py) and a script to gather performance metrics (benchmark.py).

When running MiniWorld on a cluster or in a Colab environment, you need to render to an offscreen display. You can run MiniWorld offscreen by setting the environment variable PYOPENGL_PLATFORM to egl before launching it, e.g.

PYOPENGL_PLATFORM=egl python3 your_script.py

If this doesn't work, you can instead try running MiniWorld under xvfb, e.g.

xvfb-run -a -s "-screen 0 1024x768x24 -ac +extension GLX +render -noreset" python3 your_script.py
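The EGL variant above can also be launched from Python, which may be convenient in notebooks or job launchers. This is a minimal sketch that only reproduces the shell command by setting the variable for a child process; the helper names `offscreen_environ` and `run_offscreen` are hypothetical, and `your_script.py` is the same placeholder used above.

```python
import os
import subprocess
import sys


def offscreen_environ(base=None, platform="egl"):
    """Return a copy of the environment with PYOPENGL_PLATFORM set."""
    env = dict(os.environ if base is None else base)
    env["PYOPENGL_PLATFORM"] = platform
    return env


def run_offscreen(script_path, *script_args):
    """Run a Python script in a child process configured for offscreen rendering."""
    command = [sys.executable, script_path, *script_args]
    # check=True raises CalledProcessError if the script exits with an error.
    return subprocess.run(command, env=offscreen_environ(), check=True)
```

For example, `run_offscreen("your_script.py")` is equivalent to the `PYOPENGL_PLATFORM=egl python3 your_script.py` command. Because the variable is set only in the child's environment, the parent process is left unchanged.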

To cite this project please use:

@article{MinigridMiniworld23,
  author  = {Maxime Chevalier-Boisvert and Bolun Dai and Mark Towers and Rodrigo de Lazcano and Lucas Willems and Salem Lahlou and Suman Pal and Pablo Samuel Castro and Jordan Terry},
  title   = {Minigrid \& Miniworld: Modular \& Customizable Reinforcement Learning Environments for Goal-Oriented Tasks},
  journal = {CoRR},
  volume  = {abs/2306.13831},
  year    = {2023},
}