Peng Jiang¹, Philip Osteen², Maggie Wigness² and Srikanth Saripalli¹

¹Texas A&M University; ²CCDC Army Research Laboratory

[Website] [Paper] [Github]

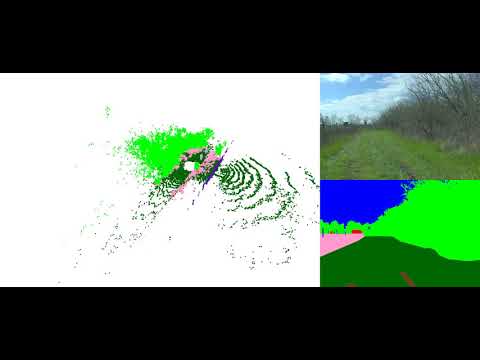

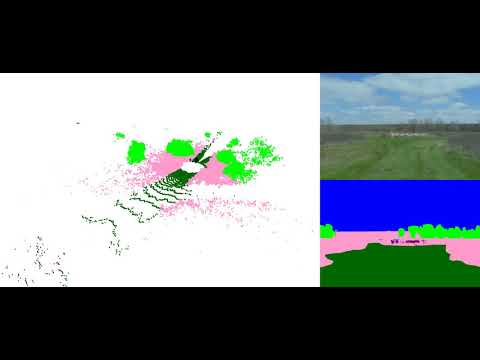

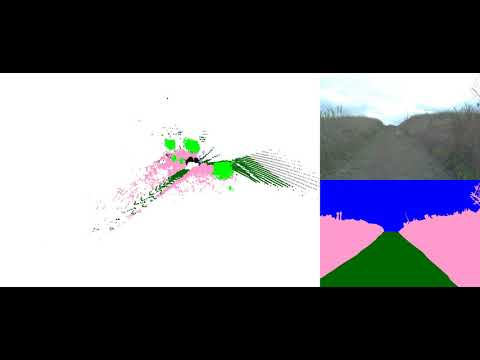

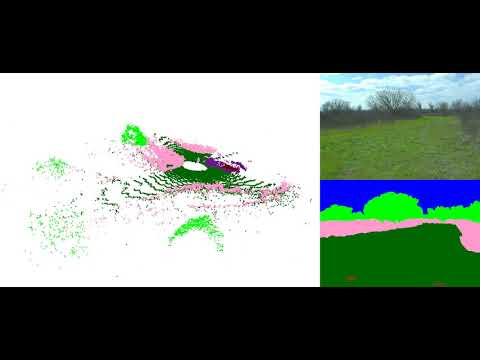

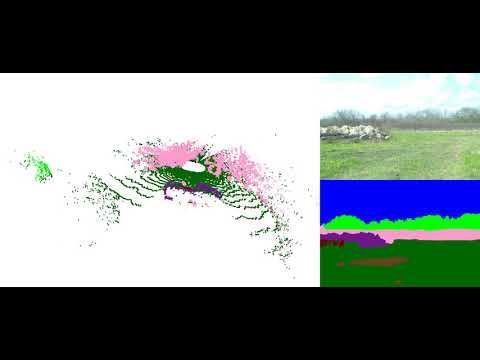

Semantic scene understanding is crucial for robust and safe autonomous navigation, particularly in off-road environments. Recent deep learning advances in 3D semantic segmentation rely heavily on large sets of training data; however, existing autonomy datasets either represent urban environments or lack multimodal off-road data. We fill this gap with RELLIS-3D, a multimodal dataset collected in an off-road environment, which contains annotations for 13,556 LiDAR scans and 6,235 images. The data was collected on the Rellis Campus of Texas A&M University and presents challenges to existing algorithms related to class imbalance and environmental topography. Additionally, we evaluate current state-of-the-art deep learning semantic segmentation models on this dataset. Experimental results show that RELLIS-3D presents challenges for algorithms designed for segmentation in urban environments. Beyond the annotated data, the dataset provides full-stack sensor data in ROS bag format, including RGB camera images, LiDAR point clouds, stereo image pairs, high-precision GPS measurements, and IMU data. This novel dataset provides the resources researchers need to develop more advanced algorithms and investigate new research directions to enhance autonomous navigation in off-road environments.

- 64-channel LiDAR: Ouster OS1
- 32-channel LiDAR: Velodyne Ultra Puck
- 3D stereo camera: Nerian Karmin2 + Nerian SceneScan
- RGB camera: Basler acA1920-50gc + Edmund Optics 16mm/F1.8 86-571
- Inertial navigation system (GPS/IMU): VectorNav VN-300 Dual Antenna GNSS/INS

With the goal of providing multimodal data to enhance autonomous off-road navigation, we defined an ontology of object and terrain classes that largely derives from the RUGD dataset but also includes unique terrain and object classes not present in RUGD. Specifically, sequences from this dataset include classes such as mud, man-made barriers, and rubble piles. Additionally, this dataset provides a finer-grained class structure for water sources, i.e., puddle and deep water, as these two classes present different traversability scenarios for most robotic platforms. Overall, 20 classes (including the void class) are present in the data.

Ontology Definition (Download 18KB)

Image with Annotation Examples (Download 3MB)

Full Images (Download 11GB)

Full Image Annotations (Download 94MB)

Image Split File (44KB)

LiDAR with Annotation Examples (Download 24MB)

LiDAR with Color Annotation PLY Format (Download 26GB)

The header of the PLY file is as follows:

```
element vertex
property float x
property float y
property float z
property float intensity
property uint t
property ushort reflectivity
property uchar ring
property ushort noise
property uint range
property uchar label
property uchar red
property uchar green
property uchar blue
```

To visualize the colors in the PLY files, please use CloudCompare or Open3D; MeshLab has problems displaying the colors.
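If you need the per-point fields (for example `label` or `ring`) rather than a viewer, the header above is enough to decode each file. Below is a minimal standard-library sketch. It assumes the files are binary PLY (endianness is read from the `format` line), that `vertex` is the first element, and that it has only scalar properties; `read_ply_vertices` is a hypothetical helper, not part of the released RELLIS-3D tools.

```python
import struct
from typing import BinaryIO, Dict, List, Tuple, Union

# PLY scalar type names mapped to struct format characters.
PLY_TYPES = {
    "char": "b", "int8": "b", "uchar": "B", "uint8": "B",
    "short": "h", "int16": "h", "ushort": "H", "uint16": "H",
    "int": "i", "int32": "i", "uint": "I", "uint32": "I",
    "float": "f", "float32": "f", "double": "d", "float64": "d",
}


def read_ply_vertices(f: BinaryIO) -> List[Dict[str, Union[int, float]]]:
    """Decode the vertex element of a binary PLY stream into one dict per point.

    Assumption: 'vertex' is the first element and has only scalar properties.
    """
    if f.readline().strip() != b"ply":
        raise ValueError("not a PLY file")
    endian, count, current = "<", 0, None
    props: List[Tuple[str, str]] = []
    while True:
        line = f.readline()
        if not line:
            raise ValueError("header ended without end_header")
        tokens = line.decode("ascii").split()
        if not tokens or tokens[0] in ("comment", "obj_info"):
            continue
        if tokens[0] == "end_header":
            break
        if tokens[0] == "format":
            formats = {"binary_little_endian": "<", "binary_big_endian": ">"}
            if tokens[1] not in formats:
                raise ValueError(f"unsupported PLY format: {tokens[1]}")
            endian = formats[tokens[1]]
        elif tokens[0] == "element":
            if current is None and tokens[1] != "vertex":
                raise ValueError("expected 'vertex' to be the first element")
            current = tokens[1]
            if current == "vertex":
                count = int(tokens[2])
        elif tokens[0] == "property" and current == "vertex":
            if tokens[1] == "list":
                raise ValueError("list properties are not supported")
            props.append((tokens[2], PLY_TYPES[tokens[1]]))
    # One fixed-size record per vertex; the '<' or '>' prefix disables padding.
    record = struct.Struct(endian + "".join(code for _, code in props))
    names = [name for name, _ in props]
    data = f.read(record.size * count)
    return [dict(zip(names, values)) for values in record.iter_unpack(data)]
```

Per-point dicts are convenient but slow for full scans; the third-party `plyfile` package (`PlyData.read(path)["vertex"]["label"]`) or a NumPy structured dtype built from the same header is the faster route.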

LiDAR SemanticKITTI Format (Download 14GB)

To visualize the dataset with the SemanticKITTI tools, please use this fork: https://github.com/unmannedlab/point_labeler

LiDAR Annotation SemanticKITTI Format (Download 174MB)
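In the SemanticKITTI layout, each scan is a `.bin` file of little-endian `float32` values, four per point (x, y, z, intensity), and each annotation is a `.label` file with one `uint32` per point whose lower 16 bits hold the semantic class and upper 16 bits the instance ID. Assuming the RELLIS-3D release follows this layout as its name indicates, a minimal standard-library reader could look like the sketch below; the function names and file paths are placeholders, and the semantic IDs should be interpreted with the ontology definition file.

```python
import struct
from typing import List, Tuple

Point = Tuple[float, float, float, float]  # x, y, z, intensity


def read_scan(data: bytes) -> List[Point]:
    """Decode a SemanticKITTI .bin scan: N x 4 little-endian float32."""
    return list(struct.iter_unpack("<4f", data))


def read_labels(data: bytes) -> Tuple[List[int], List[int]]:
    """Decode a .label file into (semantic IDs, instance IDs)."""
    raw = [value for (value,) in struct.iter_unpack("<I", data)]
    semantic = [value & 0xFFFF for value in raw]  # lower 16 bits
    instance = [value >> 16 for value in raw]     # upper 16 bits
    return semantic, instance


def load_pair(bin_path: str, label_path: str):
    """Load one scan and its labels, checking that point counts agree."""
    with open(bin_path, "rb") as f:
        points = read_scan(f.read())
    with open(label_path, "rb") as f:
        semantic, instance = read_labels(f.read())
    if len(points) != len(semantic):
        raise ValueError("scan and label files have different point counts")
    return points, semantic, instance
```

For whole sequences, the vectorized NumPy equivalents are `numpy.fromfile(path, dtype=numpy.float32).reshape(-1, 4)` for scans and `numpy.fromfile(path, dtype=numpy.uint32) & 0xFFFF` for semantic labels.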

LiDAR Scan Pose Files (Download 174MB)
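SemanticKITTI-style pose files contain one line per scan with 12 numbers, the row-major entries of a 3×4 matrix [R | t]. Assuming the RELLIS-3D pose files use that layout and that each pose maps points from the scan frame into a common map frame (neither is stated on this page, so verify against the released tools), accumulating scans reduces to applying each pose to its points. `parse_poses` and `transform_points` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple

Pose = List[List[float]]            # 3 rows x 4 columns: [R | t]
Point = Tuple[float, float, float]


def parse_poses(text: str) -> List[Pose]:
    """Parse KITTI-style pose text: 12 floats per line, row-major 3x4."""
    poses: List[Pose] = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines
        values = [float(v) for v in line.split()]
        if len(values) != 12:
            raise ValueError(f"line {line_no}: expected 12 values, got {len(values)}")
        poses.append([values[0:4], values[4:8], values[8:12]])
    return poses


def transform_points(pose: Pose, points: Sequence[Point]) -> List[Point]:
    """Apply p' = R p + t to every point."""
    return [
        tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in pose)
        for x, y, z in points
    ]
```

The i-th pose is paired with the i-th scan of the same sequence, so `transform_points(poses[i], xyz_of_scan_i)` places that scan in the shared frame.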

LiDAR Split File (75KB)

Camera Intrinsics (Download 2KB)

Camera to LiDAR (Download 3KB)
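The camera intrinsics and the camera/LiDAR extrinsics are what you need to overlay LiDAR points on the RGB images. The sketch below uses a plain pinhole model with a 3×3 intrinsic matrix `K` and a rigid transform `T_cam_lidar` that maps LiDAR coordinates into the camera frame. These names, the matrix layouts, and the direction of the transform are assumptions rather than the documented file format; if the provided transform maps camera to LiDAR, invert it first with `invert_rigid`. Lens distortion is ignored.

```python
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]


def invert_rigid(T: Matrix) -> Matrix:
    """Invert a rigid transform [R | t] (3x4 or 4x4 rows): returns [R^T | -R^T t]."""
    R = [row[:3] for row in T[:3]]
    t = [row[3] for row in T[:3]]
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def project(
    point: Sequence[float], T_cam_lidar: Matrix, K: Matrix
) -> Optional[Tuple[float, float]]:
    """Project a LiDAR-frame point to pixel (u, v); None if it is behind the camera."""
    x, y, z = point
    # LiDAR frame -> camera frame.
    xc, yc, zc = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in T_cam_lidar[:3])
    if zc <= 0.0:
        return None
    # Pinhole model with K's last row = [0, 0, 1]: (u*zc, v*zc, zc) = K (xc, yc, zc).
    u = (K[0][0] * xc + K[0][1] * yc + K[0][2] * zc) / zc
    v = (K[1][0] * xc + K[1][1] * yc + K[1][2] * zc) / zc
    return u, v
```

Points that project outside the image bounds still need to be discarded by the caller, since `project` only rejects points behind the camera.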

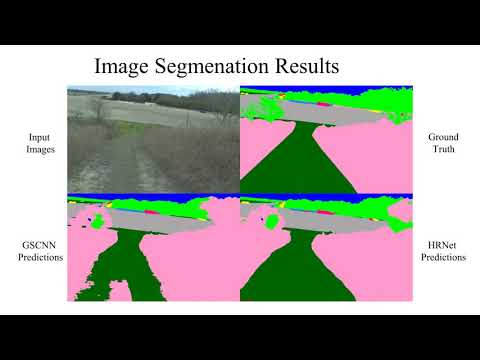

Image semantic segmentation benchmark (per-class IoU, %):

| models | sky | grass | tree | bush | concrete | mud | person | puddle | rubble | barrier | log | fence | vehicle | object | pole | water | asphalt | building | mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HRNet+OCR | 96.94 | 90.20 | 80.53 | 76.76 | 84.22 | 43.29 | 89.48 | 73.94 | 62.03 | 54.86 | 0.00 | 39.52 | 41.54 | 46.44 | 9.51 | 0.72 | 33.25 | 4.60 | 48.83 |
| GSCNN | 97.02 | 84.95 | 78.52 | 70.33 | 83.82 | 45.52 | 90.31 | 71.49 | 66.03 | 55.12 | 2.92 | 41.86 | 46.51 | 54.64 | 6.90 | 0.94 | 44.18 | 11.47 | 50.13 |

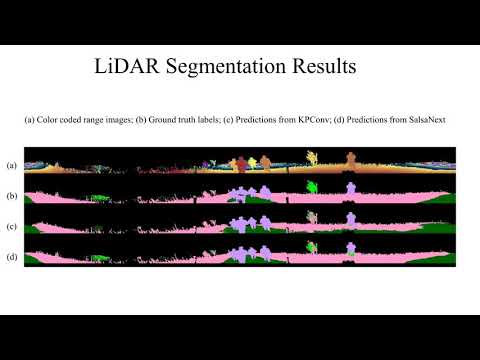

LiDAR semantic segmentation benchmark (per-class IoU, %):

| models | sky | grass | tree | bush | concrete | mud | person | puddle | rubble | barrier | log | fence | vehicle | object | pole | water | asphalt | building | mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SalsaNext | - | 64.74 | 79.04 | 72.90 | 75.27 | 9.58 | 83.17 | 23.20 | 5.01 | 75.89 | 18.76 | 16.13 | 23.12 | - | 56.26 | 0.00 | - | - | 40.20 |
| KPConv | - | 56.41 | 49.25 | 58.45 | 33.91 | 0.00 | 81.20 | 0.00 | 0.00 | 0.00 | 0.00 | 0.40 | 0.00 | - | 0.00 | 0.00 | - | - | 18.64 |
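The benchmark tables report one score per class plus a `mean` column. Assuming the standard intersection-over-union definition, IoU_c = TP_c / (TP_c + FP_c + FN_c), the sketch below computes per-class IoU and its mean from a confusion matrix. Which classes enter the mean for the official numbers is not stated on this page, so this sketch simply skips classes that occur in neither the ground truth nor the predictions; `ignore_index` is a hypothetical parameter for dropping void points.

```python
from typing import List, Optional, Sequence


def confusion_matrix(
    gt: Sequence[int], pred: Sequence[int], num_classes: int,
    ignore_index: Optional[int] = None,
) -> List[List[int]]:
    """cm[g][p] counts points with ground truth g predicted as p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if ignore_index is not None and g == ignore_index:
            continue  # e.g. void points excluded from scoring (assumption)
        cm[g][p] += 1
    return cm


def per_class_iou(cm: List[List[int]]) -> List[Optional[float]]:
    """IoU_c = TP / (TP + FP + FN); None when class c never occurs at all."""
    n = len(cm)
    ious: List[Optional[float]] = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else None)
    return ious


def mean_iou(ious: List[Optional[float]]) -> float:
    """Average over classes that have a defined IoU."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid) if valid else 0.0
```

Accumulating one confusion matrix over the whole test split before computing IoU, rather than averaging per-scan IoUs, is the usual convention and keeps rare classes from being dominated by scans where they barely appear.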

To reproduce these results, please refer to the instructions here.

Topics included in the raw ROS bag files:

| Topic Name | Message Type | Message Description |
|---|---|---|
| /img_node/intensity_image | sensor_msgs/Image | Intensity image generated by the Ouster LiDAR |
| /img_node/noise_image | sensor_msgs/Image | Noise image generated by the Ouster LiDAR |
| /img_node/range_image | sensor_msgs/Image | Range image generated by the Ouster LiDAR |
| /imu/data | sensor_msgs/Imu | Filtered IMU data from the embedded IMU of the Warthog |
| /imu/data_raw | sensor_msgs/Imu | Raw IMU data from the embedded IMU of the Warthog |
| /imu/mag | sensor_msgs/MagneticField | Raw magnetic field data from the embedded IMU of the Warthog |
| /nerian_stereo/left_image | sensor_msgs/Image | Left image from the Nerian Karmin2 |
| /nerian_stereo/right_image | sensor_msgs/Image | Right image from the Nerian Karmin2 |
| /odometry/filtered | nav_msgs/Odometry | Filtered localization estimate based on wheel odometry (encoders) and the integrated IMU of the Warthog |
| /os1_cloud_node/imu | sensor_msgs/Imu | Raw IMU data from the embedded IMU of the Ouster LiDAR |
| /os1_cloud_node/points | sensor_msgs/PointCloud2 | Point cloud from the Ouster LiDAR |
| /os1_node/imu_packets | ouster_ros/PacketMsg | Raw IMU packets from the Ouster LiDAR |
| /os1_node/lidar_packets | ouster_ros/PacketMsg | Raw LiDAR packets from the Ouster LiDAR |
| /vectornav/GPS | sensor_msgs/NavSatFix | INS data from the VectorNav VN-300 |
| /vectornav/IMU | sensor_msgs/Imu | IMU data from the VectorNav VN-300 |
| /vectornav/Mag | sensor_msgs/MagneticField | Raw magnetic field data from the VectorNav VN-300 |
| /vectornav/Odom | nav_msgs/Odometry | Odometry from the VectorNav VN-300 |
| /vectornav/Pres | sensor_msgs/FluidPressure | Pressure data from the VectorNav VN-300 |
| /vectornav/Temp | sensor_msgs/Temperature | Temperature data from the VectorNav VN-300 |
| /velodyne_points | sensor_msgs/PointCloud2 | Point cloud from the Velodyne LiDAR |
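The `sensor_msgs/PointCloud2` topics (`/os1_cloud_node/points`, `/velodyne_points`) store points as packed binary records described by the message's `fields`, `point_step`, and `is_bigendian`. In a ROS 1 environment, `sensor_msgs.point_cloud2.read_points` decodes them for you; the sketch below shows the same idea with only the standard library, assuming every field has `count == 1` and rows carry no padding (`row_step == width * point_step`). `iter_points` is a hypothetical helper name.

```python
import struct
from typing import Iterator, List, Optional, Sequence, Tuple

# sensor_msgs/PointField datatype constants mapped to struct format characters.
POINTFIELD_FORMATS = {
    1: "b",  # INT8
    2: "B",  # UINT8
    3: "h",  # INT16
    4: "H",  # UINT16
    5: "i",  # INT32
    6: "I",  # UINT32
    7: "f",  # FLOAT32
    8: "d",  # FLOAT64
}

Field = Tuple[str, int, int]  # (name, byte offset within a point, datatype)


def iter_points(
    fields: Sequence[Field],
    point_step: int,
    data: bytes,
    is_bigendian: bool = False,
    names: Optional[List[str]] = None,
) -> Iterator[tuple]:
    """Yield one tuple per point, ordered like `names` (default: all fields)."""
    endian = ">" if is_bigendian else "<"
    layout = {name: (offset, datatype) for name, offset, datatype in fields}
    wanted = names if names is not None else [name for name, _, _ in fields]
    unpackers = [
        (struct.Struct(endian + POINTFIELD_FORMATS[layout[n][1]]), layout[n][0])
        for n in wanted
    ]
    # Walk the buffer one point_step at a time; padding bytes are simply skipped.
    for base in range(0, len(data) - point_step + 1, point_step):
        yield tuple(s.unpack_from(data, base + off)[0] for s, off in unpackers)
```

With ROS 1 installed, messages can be pulled from a bag via `rosbag.Bag(path).read_messages(topics=["/os1_cloud_node/points"])`, which yields `(topic, msg, t)` tuples; each message's layout can then be passed in as `[(f.name, f.offset, f.datatype) for f in msg.fields]` together with `msg.point_step`, `msg.data`, and `msg.is_bigendian`.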

ROS Bag Examples (2GB)

Sequence 00000: Synced data: (12GB) Filtered data: (23GB) Full-stack Raw data: (29GB)

Sequence 00001: Synced data: (8GB) Filtered data: (16GB) Full-stack Raw data: (22GB)

Sequence 00002: Synced data: (14GB) Filtered data: (28GB) Full-stack Raw data: (37GB)

Sequence 00003: Synced data: (8GB) Filtered data: (15GB) Full-stack Raw data: (19GB)

Sequence 00004: Synced data: (7GB) Filtered data: (14GB) Full-stack Raw data: (17GB)

The ROS workspace includes a platform description package that provides a rough tf tree for playing back the bag files.

```bibtex
@misc{jiang2020rellis3d,
      title={RELLIS-3D Dataset: Data, Benchmarks and Analysis},
      author={Peng Jiang and Philip Osteen and Maggie Wigness and Srikanth Saripalli},
      year={2020},
      eprint={2011.12954},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

All datasets and code on this page are copyrighted by us and published under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License.

- 11/26/2020 v1.0 release

SemanticUSL: A Dataset for Semantic Segmentation Domain Adaptation

LiDARNet: A Boundary-Aware Domain Adaptation Model for Lidar Point Cloud Semantic Segmentation