Autonomous visual navigation components for drones and ground vehicles using deep learning. Refer to the wiki for more information on how to get started.

This project contains deep neural networks, computer vision and control code, hardware instructions and other artifacts that allow users to build a drone or a ground vehicle that can autonomously navigate through highly unstructured environments such as forest trails and sidewalks. Our TrailNet DNN for visual navigation runs on NVIDIA's Jetson embedded platform. Our arXiv paper describes TrailNet and other runtime modules in detail.

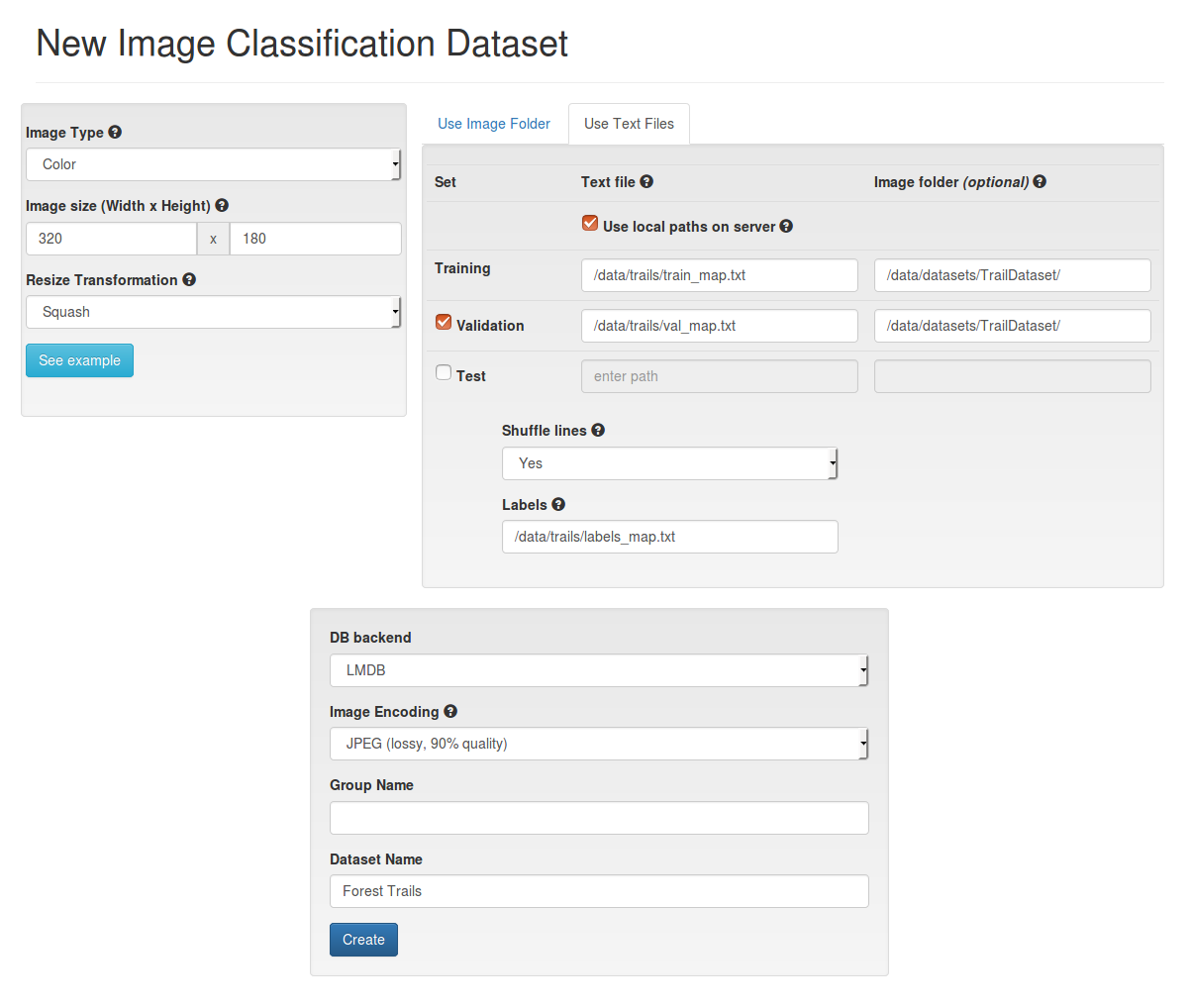

The project's deep neural networks (DNNs) can be trained from scratch using publicly available data. A few pre-trained DNNs are also available as part of this project. To train the TrailNet DNN from scratch, follow the steps on this page.

The project also contains Stereo DNN models and a runtime that allow estimating depth from a stereo camera on NVIDIA platforms.
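For a rectified stereo pair, depth and disparity are related by depth = f · B / d, where f is the focal length in pixels, B is the baseline between the two cameras and d is the disparity in pixels. The minimal sketch below assumes the Stereo DNN output is a per-pixel disparity map; the function names and the calibration values in the example are hypothetical and not taken from the redtail code.

```python
from typing import List


def disparity_to_depth(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Convert one disparity value (pixels) to metric depth (meters).

    Uses the rectified-stereo pinhole relation depth = f * B / d.
    Non-positive disparities (no match, or a point at infinity) map to inf.
    """
    if disparity_px <= 0.0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def disparity_map_to_depth(disparity: List[List[float]], focal_length_px: float,
                           baseline_m: float) -> List[List[float]]:
    """Apply disparity_to_depth to every pixel of a row-major disparity map."""
    return [[disparity_to_depth(d, focal_length_px, baseline_m) for d in row]
            for row in disparity]


# Hypothetical calibration: 700 px focal length, 0.12 m baseline.
print(disparity_to_depth(42.0, 700.0, 0.12))  # ≈ 2.0 m
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant points than for nearby ones.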

IROS 2018: we presented our work at the IROS 2018 conference as part of the "Vision-based Drones: What's Next?" workshop.

CVPR 2018: we presented our work at the CVPR 2018 conference as part of the Workshop on Autonomous Driving.

- Stereo DNN, CVPR18 paper, Stereo DNN video demo

- TrailNet Forest Drone Navigation, IROS17 paper, TrailNet DNN video demo

- GTC 2017 talk: slides, video

- Demo video showing 250m autonomous flight with TrailNet DNN flying the drone

- Demo video showing our 1 kilometer autonomous drone flight with TrailNet DNN

- Demo video showing TrailNet DNN driving a robotic rover around the office

- Demo video showing TrailNet generalization to ground vehicles and other environments


2020-02-03: Alternative implementations. redtail is no longer being developed, but fortunately our community has stepped in and continued developing the project. We thank our users for their interest in redtail and for their questions and feedback!

Some alternative implementations are listed below.

- @mtbsteve: https://github.com/mtbsteve/redtail


2018-10-10: Stereo DNN ROS node and fixes.

- Added Stereo DNN ROS node and visualizer node.

- Fixed issue with nvidia-docker v2.


2018-09-19: Updates to Stereo DNN.

- Moved to TensorRT 4.0.

- Enabled FP16 support in the ResNet18 2D model, resulting in a 2x performance increase (20 fps on Jetson TX2).

- Enabled TensorRT serialization in the ResNet18 2D model to reduce model loading time from minutes to less than a second.

- Better logging and profiler support.
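The loading-time improvement from TensorRT serialization comes from building the optimized engine once and reusing its serialized form on later runs (in TensorRT's C++ API, via `ICudaEngine::serialize()` and `IRuntime::deserializeCudaEngine()`). The sketch below illustrates only that caching pattern under stated assumptions: `build_engine` is a hypothetical stand-in for the slow build step, and the cache path is illustrative rather than the one redtail uses.

```python
import os
from typing import Callable


def load_or_build_engine(cache_path: str, build_engine: Callable[[], bytes]) -> bytes:
    """Return a serialized engine, building and caching it only on the first call.

    build_engine is a hypothetical callable standing in for the expensive
    optimization step; its result is written to cache_path so that later
    runs only pay for a file read.
    """
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as f:
            return f.read()          # fast path: reuse the cached plan
    plan = build_engine()            # slow path: build the engine from scratch
    with open(cache_path, "wb") as f:
        f.write(plan)                # persist it for subsequent runs
    return plan
```

Serialized TensorRT engines are specific to the GPU and TensorRT version they were built with, so a real cache should be rebuilt whenever either changes.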


2018-06-04: CVPR 2018 workshop. Fast version of Stereo DNN.

- Presenting our work at the CVPR 2018 conference as part of the Workshop on Autonomous Driving.

- Added a fast version of the Stereo DNN model based on the ResNet18 2D model. The model runs at 10 fps on Jetson TX2. See the README for details and check out the updated sample_app.


GTC 2018: here is our Stereo DNN session page at GTC18 and the recorded video presentation.


2018-03-22: redtail 2.0.

- Added Stereo DNN models and inference library (TensorFlow/TensorRT). For more details, see the README.

- Migrated to JetPack 3.2. This change brings the latest components, such as CUDA 9.0, cuDNN 7.0, TensorRT 3.0 and OpenCV 3.3, to the Jetson platform. Note that this is a breaking change.

- Added support for INT8 inference. This enables fast inference on devices with hardware support for INT8 instructions. More details are on our wiki.
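INT8 inference runs layers on 8-bit integers instead of floating-point values, which requires mapping each tensor onto integers through a scale factor. The minimal sketch below shows plain symmetric linear quantization for a given absolute-maximum range `amax`; it is a simplified illustration of the idea, not TensorRT's calibration procedure (which picks the ranges from calibration data), and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float], amax: float) -> Tuple[List[int], float]:
    """Symmetric linear quantization of floats in [-amax, amax] to int8 codes.

    Returns the codes and the scale such that value ≈ code * scale.
    Values outside the range are clamped (saturated) to [-127, 127].
    """
    if amax <= 0.0:
        raise ValueError("amax must be positive")
    scale = amax / 127.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]
```

Each value then occupies one byte instead of four (FP32), and for in-range values the rounding error is at most half the scale. Using the symmetric range [-127, 127] keeps zero exactly representable.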


2018-02-15: added support for the TBS Discovery platform.

- Step-by-step instructions on how to assemble the TBS Discovery drone.

- Instructions on how to attach and use a ZED stereo camera.

- Detailed instructions on how to calibrate, test and fly the drone.


2017-10-12: added a full simulation Docker image, experimental support for APM Rover and support for MAVROS v0.21+.

- The Redtail simulation Docker image contains all the components required to run the full Redtail simulation in Docker. Refer to the wiki for more information.

- Experimental support for APM Rover. Refer to the wiki for more information.

- Several other changes, including support for MAVROS v0.21+, an updated Jetson install script and a few bug fixes.


2017-09-07: NVIDIA Redtail project is released as an open source project.

Redtail's AI modules allow building autonomous drones and mobile robots based on deep learning and the NVIDIA Jetson TX1 and TX2 embedded systems. Source code, pre-trained models, and detailed build and test instructions are released on GitHub.


2017-07-26: migrated code and scripts to JetPack 3.1 with TensorRT 2.1.

TensorRT 2.1 provides significant improvements in DNN inference performance as well as new features and bug fixes. This is a breaking change that requires re-flashing the Jetson with JetPack 3.1.